garrettwilkin.me

A technologist seeking meaningful work, challenging problems, and an inspiring team.

Certifications

AWS Certified Solutions Architect – Associate

Work

Current

- BDN Maine - Lead Developer - Product Development & DevOps since October 2014.

Previous

- Parse.ly - Developer Evangelist

Civic Hacking

- Code for America brigade Co-Captain of Code for Maine 2013 - 2014.

Non-profit Leadership & Education

- Co-founder & Board member of Hacktivate, connecting makers since 2013.

Projects

AWS Migration - September 2016

- I recommended, planned, and executed a complete migration into Amazon Web Services of all web serving systems in use by the Bangor Publishing Company.

- Consolidating our services on AWS produced a 33% reduction in overall monthly hosting costs.

- In order to benefit as much as possible from Amazon's engineering and support I recommended adopting AWS managed services wherever possible, including:

- RDS - Relational Database Service

- VPC - Virtual Private Cloud

- Security Groups

- Subnets and Subnet Groups

- EC2 - Elastic Compute Cloud

- Auto scaling

- AMIs - Amazon Machine Images

- ElastiCache - Managed memcached

- CloudFront - Content delivery & full page caching

- S3 - Storage and serving of static assets

- Route53 - DNS

- Lambda - On-demand runtimes

- CloudWatch - Monitoring and alerting

- Post-migration, I trained additional newsroom staff to monitor and report system stability incidents.

Tools

My typical tools of choice include:

- Python - flask, django, etc.

- JavaScript - Node.js server side or in the browser.

- Amazon Web Services.

- ansible - for repeatable deployment and configuration.

- AWS CloudWatch or statsd + graphite - for monitoring.

- git - for version control

- Postgres, MySQL, or Mongo at the database layer.

- Elasticsearch - You know, for search.

- WordPress - the CMS that just works.

- PagerDuty - for alerting.

- Jenkins - for continuous integration & delivery

Open source matters. I feel a deep sense of gratitude to the open source communities that have produced many of the applications I use in the solutions I build. I look forward to making my first open source contribution this year.

Remote Work

I worked remotely from the countryside of Maine for 4 years before accepting my current full-time, on-site role.

I prefer to use Google Hangouts, Google Docs, Slack, and other apps for collaborating with my colleagues and clients, both in real time and asynchronously.

I love the freedom to live where I choose, and have seen the efficiencies that the remote collaboration workflow can bring.

I learned from experience that working remotely works better for me when I work from an office that is not also my home.

Conferences

It is really important to me to participate in the wider technical community. I enjoy being around people who are passionate about building things because we inspire each other's curiosity. I've been fortunate to attend many conferences over the years, to have organized a few, and to have presented at a few as a speaker.

- 2016 Sep - ONA16 - Online News Association’s annual gathering.

- Went as a technologist seeking to understand the soul of journalism.

- 2015 Sep - Agents of Change

- Presented with BDN Maine colleagues Jake Emerson and Shannon Kinney on data, audience, and advertising.

- 2015 Jul - WordCamp Boston

- Attended with the BDN team.

- 2014 Sep - Code for America Summit

- My first in-person meetup with the Code for America crew from across the US.

- 2014 Jun - Hacking Journalism

- Participated as an event sponsor (and hackathon entrant) while evangelizing Parse.ly's analytics platform.

- 2014 Jun - Maine Civic Hack Day

- A bigger and better version of MCHD 2013. More problems, more participants, more awesome!

- 2013 Nov - Ultimate Developer Event

- Presented on Civic hacking and building community (with laryngitis).

- 2013 Aug - BDN What’s NEXT

- Panelist on tech innovation in Maine

- 2013 Jun - Maine Civic Hack Day

- Organized my first event!

- 2012 Mar - SXSW Interactive

- Attended as press with ProgrammableWeb

- 2012 Mar - Angel Hack Boston

- Second hackathon!

- 2011 Nov - Music Hackday

- My first hackathon!

Infrequently Asked Questions

If you could have one super power (and only one), which one would you choose?

If I could have one and only one super power, it would be the ability to wield stone through telekinesis. Stone is a long-term material. It is sturdy and will stand the test of time. I seek to create a lasting positive effect on the world, and that's why this super power appeals to me. Which one would you choose?

Tell me about a time when you encouraged the adoption of a new tool among a set of users?

I led the adoption of DevOps culture at the Bangor Daily News.

The essential tools and processes we adopted include:

- Storing configuration in source control (GitHub).

- Repeatable deployments through ansible playbooks.

- Multi-stage development and deployment environments (development, staging, performance, and production).

- Monitoring resource utilization, specifically on web serving and database instances.

How about some charts?

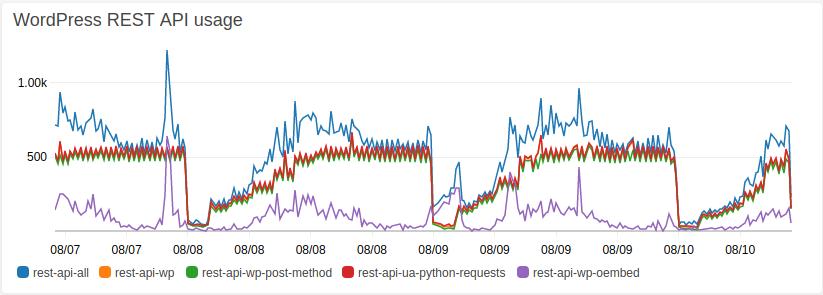

Here's a chart from some custom metrics monitoring I configured for the WordPress REST API, which we run in production at bangordailynews.com.

Our Lambda function activity, represented in the rest-api-python-ua-requests series, is the single largest source of WordPress REST API traffic.

The WordPress REST API content endpoints officially landed in core in v4.7. Version 4.8 has since been released and received a maintenance patch, but the API is still a relatively lightly used feature for WordPress content syndication compared to the amount of traffic that we see from bots consuming HTML or RSS. We are still the most active consumers of our own API.

Our primary use case for the WordPress REST API is to ingest analytics data. We recently launched two AWS Lambda functions that poll Google Analytics 360 for both realtime and historical metrics. The metrics are joined to our articles (WordPress posts) using the path portion of the URL. Once we've determined which metrics match which WordPress post, we can then update the post with that metrics data.
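Here's a minimal sketch of that join step, not the production code. It assumes each post carries the `id` and `link` fields that the WordPress REST API returns, and that each analytics row has been reduced to a hypothetical dict with `pagePath` and `pageviews` keys. The example URLs and numbers are made up for illustration.

```python
from urllib.parse import urlparse


def normalize_path(url_or_path):
    """Return only the path portion, ignoring host, query string, and trailing slash."""
    path = urlparse(url_or_path).path
    return path.rstrip("/") or "/"


def join_metrics_to_posts(posts, metrics):
    """Map post id -> total pageviews, matching rows to posts by URL path."""
    post_id_by_path = {normalize_path(p["link"]): p["id"] for p in posts}
    matched = {}
    for row in metrics:
        post_id = post_id_by_path.get(normalize_path(row["pagePath"]))
        if post_id is not None:  # rows for non-article pages are skipped
            matched[post_id] = matched.get(post_id, 0) + row["pageviews"]
    return matched


# Hypothetical sample data.
posts = [
    {"id": 101, "link": "https://example.com/2017/06/01/news/first-story/"},
    {"id": 102, "link": "https://example.com/2017/06/02/sports/second-story/"},
]
metrics = [
    {"pagePath": "/2017/06/01/news/first-story/", "pageviews": 120},
    {"pagePath": "/2017/06/01/news/first-story/?utm_source=x", "pageviews": 30},
    {"pagePath": "/2017/06/02/sports/second-story", "pageviews": 7},
    {"pagePath": "/about/", "pageviews": 55},
]

print(join_metrics_to_posts(posts, metrics))  # {101: 150, 102: 7}
```

Normalizing both sides before the lookup means tracking parameters and trailing slashes don't cause a post to be missed.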

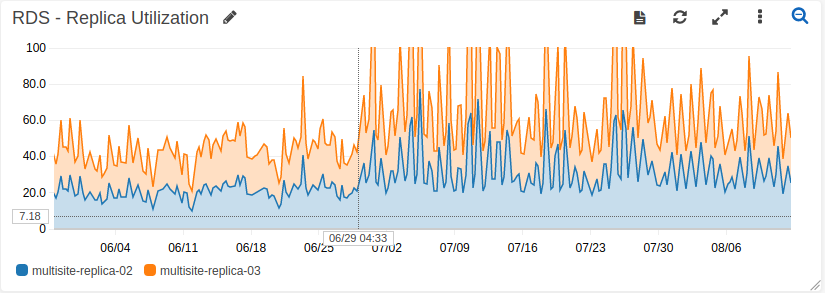

Shortly after launching these Lambda functions we noticed a marked increase in CPU utilization in our Aurora RDS cluster.

Once we accepted that the Lambda functions were the likely cause of the increased CPU utilization, the simplest way to reduce it was to run the functions less frequently. The function for handling realtime metrics only updates around 30 posts in WordPress per invocation, but the function for processing historical metrics updates as many as 100 posts per run, simply because more unique URLs see significant traffic over a longer time period.

The historical function actually took between 200 and 300 seconds to execute at each invocation, primarily because it executes its HTTP requests synchronously, one after another. Since each transaction takes 1-2 seconds on average, and the function has just over 100 API calls to make, it's easy to run into an execution time of 4+ minutes.
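To illustrate why one-at-a-time requests add up, here's a small self-contained sketch. It is not our Lambda code: `fake_update_post` is a hypothetical stand-in that just sleeps for a scaled-down latency instead of calling the REST API, and the thread pool variant is shown only for comparison.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_update_post(post_id, latency=0.01):
    """Stand-in for one blocking HTTP request; sleeps instead of calling an API."""
    time.sleep(latency)
    return post_id


def run_sequential(post_ids):
    """One request at a time: total time is roughly the sum of all latencies."""
    start = time.perf_counter()
    results = [fake_update_post(pid) for pid in post_ids]
    return results, time.perf_counter() - start


def run_concurrent(post_ids, workers=10):
    """Up to `workers` requests in flight at once via a thread pool."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        results = list(pool.map(fake_update_post, post_ids))
    return results, time.perf_counter() - start


post_ids = list(range(100))
seq_results, seq_elapsed = run_sequential(post_ids)
con_results, con_elapsed = run_concurrent(post_ids)
print(f"sequential: {seq_elapsed:.2f}s, concurrent: {con_elapsed:.2f}s")
```

Concurrency like this would shorten the function's runtime, but it would also pack the same number of writes into a shorter window, so it wouldn't necessarily ease the load on the database.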

So we opened the configuration details for the longer-running historical function in the AWS Lambda console, detached its "every five minutes" CloudWatch schedule trigger, and enabled a new "every ten minutes" trigger. Just like that, peak CPU utilization in the RDS cluster returned to a tolerable range.
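We made this change by hand in the console, but the effect of the schedule is easy to sketch. The helpers below are hypothetical and only build the `rate(...)` schedule expression string that CloudWatch Events uses, then count how many invocations per day each schedule implies.

```python
def rate_expression(minutes):
    """Build a CloudWatch Events rate expression such as 'rate(10 minutes)'."""
    if minutes < 1:
        raise ValueError("minutes must be a positive integer")
    # The unit is singular for a value of 1 and plural otherwise.
    unit = "minute" if minutes == 1 else "minutes"
    return f"rate({minutes} {unit})"


def invocations_per_day(minutes):
    """Number of scheduled runs in 24 hours for a given interval."""
    return (24 * 60) // minutes


for interval in (5, 10):
    print(rate_expression(interval), invocations_per_day(interval))
# rate(5 minutes) 288
# rate(10 minutes) 144
```

If you script this instead of using the console, the same string is what boto3's `put_rule` call on the `events` client takes as its `ScheduleExpression` parameter.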

A runtime of several minutes may seem ludicrous when you consider the 'quick, cheap, infrequent' use case that Lambda is meant to serve. But in our case, the incentive to further optimize this script is low for now. Running for 4-5 minutes out of every 10 is still no more than about 50% utilization, and the 128MB container size is ample, since the script only ever consumes ~30MB while running.
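These utilization figures, and how they compare to the Lambda free tier, can be checked with some quick arithmetic. This is a rough sketch that assumes a free tier compute allowance of 400,000 GB-seconds per month, a 30-day month, and billing for the full 128MB allocation. It ignores the realtime function and the per-request allowance.

```python
FREE_TIER_GB_SECONDS = 400_000  # assumed monthly free tier compute allowance
MEMORY_GB = 128 / 1024          # configured container size, in GB
INTERVAL_SECONDS = 10 * 60      # the "every ten minutes" schedule
DAYS_PER_MONTH = 30             # assumption for a round estimate


def duty_cycle(run_seconds):
    """Fraction of each schedule interval the function spends running."""
    return run_seconds / INTERVAL_SECONDS


def monthly_gb_seconds(run_seconds):
    """Billed compute for one month of scheduled runs."""
    runs = DAYS_PER_MONTH * 24 * 3600 // INTERVAL_SECONDS
    return runs * run_seconds * MEMORY_GB


for run_seconds in (200, 250, 300):
    share = monthly_gb_seconds(run_seconds) / FREE_TIER_GB_SECONDS
    print(
        f"{run_seconds}s per run: duty cycle {duty_cycle(run_seconds):.0%}, "
        f"free tier share {share:.0%}"
    )
```

For runs in the 200 to 300 second range, this puts the historical function at roughly a quarter to two fifths of the assumed allowance.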

Even with this long runtime, scheduling the function to run every 10 minutes uses only about a third of the Lambda free tier allotment.

Contact

Drop me a line, I'd love to hear from you.

- twitter - garrettwilkin

- email - garrett.wilkin@gmail.com

- github - garrettwilkin

- linkedin - Garrett Wilkin

- resume - GarrettWilkin - Lead Developer - 2017